We often think of verification as something done toward the end of a program’s development phase, to be concluded just before the operational phase begins. That is true of the execution and reporting of the final, formal verification activities. In reality, though, the process should start much earlier, at the same time that the originating requirements are captured, refined, and allocated.

There are several reasons for starting the verification process early with a verification planning phase. First, writing verification requirements at the same time as the corresponding requirements helps validate those requirements, because it requires the team to think harder about their implications. This can generate follow-up conversations with users, customers, and other stakeholders to further clarify and refine the requirements.

Second, this activity forces the team to resolve any ambiguity in the requirements themselves. All too often, vague, ambiguous requirements are allowed to persist well into the detailed design phase of a program. The risk here is that every individual, including the detailed design engineers making implementation decisions, will fill in the ambiguity with their own interpretations. This inevitably leads to confusion and issues farther downstream during the final verification when these assumptions are finally exposed. Leaving this ambiguity to fester only creates technical, cost, and schedule issues.

Third, writing verification requirements early forces the team to think concretely about the means by which the requirements will be verified. That, in turn, requires clarity of purpose; more careful use of words that invoke mathematical logic constructions (and, or, if, while, when, etc.) and are often used too cavalierly; avoiding unverifiable words (such as “maximize,” “minimize,” and “optimize”); and defining the success criteria (how many times does the feature or performance criterion have to be demonstrated?), phase, level, and so on. This thought process leads to better requirements early, which pays off throughout the remainder of the project’s lifecycle.
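As a rough illustration of the wording checks described above, a short Python sketch can flag words that deserve a second look in a requirement statement. The word lists come from the examples in this article; the function name `review_requirement` and the sample requirement text are hypothetical, and a real program would tailor both to its own style guide.

```python
import re

# Word lists taken from the examples in the text; extend them as needed.
UNVERIFIABLE = {"maximize", "minimize", "optimize"}
LOGIC_WORDS = {"and", "or", "if", "while", "when"}


def review_requirement(text: str) -> dict:
    """Return the words in a requirement statement that deserve a second look."""
    words = re.findall(r"[a-z]+", text.lower())
    return {
        # Words that cannot be verified against a concrete success criterion.
        "unverifiable": sorted({w for w in words if w in UNVERIFIABLE}),
        # Words that imply logical structure and should be used deliberately.
        "logic": sorted({w for w in words if w in LOGIC_WORDS}),
    }


# Hypothetical requirement statement, for illustration only.
findings = review_requirement(
    "The system shall minimize latency when the link is degraded and report status."
)
print(findings)
```

A flagged logic word is not necessarily wrong; the point is only to prompt the author to confirm that the logical construction is intended.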

What about Validation?

We typically hear and use the phrase “verification and validation,” but then end up only addressing verification. Before we move forward, let’s quickly review both of these concepts.

Rather than provide formal definitions from a textbook or standard, I will summarize them using short phrases that capture the key points:

• Verification answers the question, “Did I build it right?”

• Validation answers the question, “Did I build the right thing?”

Once a requirement is written, it must be verified. The originating requirements, which were translated by someone from statements of customer needs in an operational environment, require validation:

Was that translation correct?

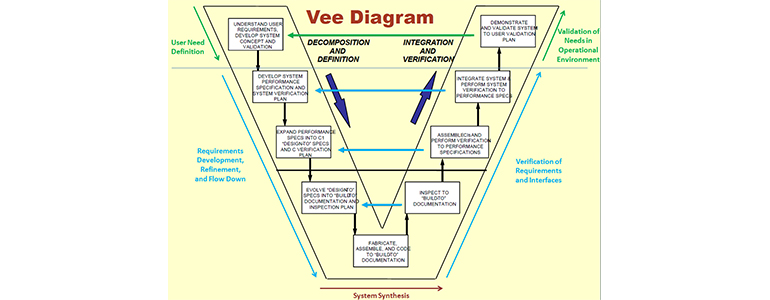

The figure at the top of this article shows both verification and validation in the context of the traditional systems engineering “vee.” Going through the early step of writing verification requirements, and going back and engaging your customers and stakeholders to explicitly ask them if the captured requirements accurately reflect their desirements, helps validate that the requirements are an accurate capture of their needs. If this requirements validation step is done early, it helps align and overlap the formal verification and validation activities. However, once system level verification is complete, it is still a best practice to validate the system in its actual operational environment under realistic operating conditions, external threats, and external system interactions. Only when those activities have been conducted can the system truly be considered validated.

An End-to-End Verification & Validation Modeling Approach

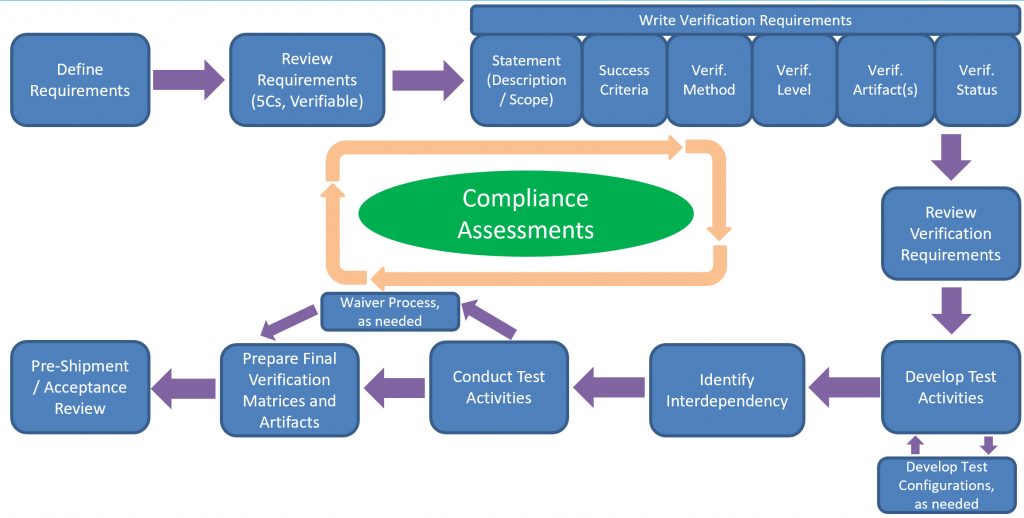

With this understanding in mind, a simple end-to-end verification process can be laid out, starting with the definition of the requirements and extending through the acceptance of the system by the customer. The major steps are shown in Figure 1.

Figure 1: An End-to-End Verification Process

As the requirements are written and refined, verification requirements are also written, as discussed previously. Each verification requirement should define the method (test, analysis, demonstration, or inspection), the level (the same hierarchical level as the requirement, lower, or higher), the program phase (if applicable), a description of the activity that will take place, and the success criteria (what must the outcome be for the verification to be considered successful?). A requirement may have more than one verification requirement. For example, a structural loading requirement may be verified first by structural analysis earlier in the program lifecycle and then later by a structural proof loading test, each captured as its own verification requirement.
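The fields listed above can be sketched as a small data structure. This is plain Python for illustration, not the GENESYS schema: the class and field names, the requirement ID, the phase labels, and the success-criteria wording are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Method(Enum):
    TEST = "test"
    ANALYSIS = "analysis"
    DEMONSTRATION = "demonstration"
    INSPECTION = "inspection"


class Level(Enum):
    SAME = "same"      # same hierarchical level as the requirement
    LOWER = "lower"
    HIGHER = "higher"


@dataclass
class VerificationRequirement:
    requirement_id: str           # the requirement being verified
    method: Method
    level: Level
    description: str              # the activity that will take place
    success_criteria: str         # the outcome needed for success
    phase: Optional[str] = None   # program phase, if applicable


# One requirement with two verification requirements, mirroring the
# structural loading example. All values are hypothetical placeholders.
structural_vrs = [
    VerificationRequirement(
        requirement_id="REQ-STRUCT-1",
        method=Method.ANALYSIS,
        level=Level.SAME,
        description="Structural analysis against the specified loads",
        success_criteria="Analysis shows the structure withstands the specified loads",
        phase="design",
    ),
    VerificationRequirement(
        requirement_id="REQ-STRUCT-1",
        method=Method.TEST,
        level=Level.SAME,
        description="Structural proof loading test",
        success_criteria="Structure sustains the proof load",
        phase="qualification",
    ),
]

methods = [vr.method.value for vr in structural_vrs]
print(methods)
```

Keeping the success criteria as a required field makes it harder to write a verification requirement without stating what a passing outcome looks like.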

Once the verification requirements are written, they can be mapped to test activities, which are the events that are executed to conduct the verifications. If needed, test configurations defining any special test facilities, equipment, personnel, etc., may also be specified. Once the test activities are generated, they can be sequenced to identify needed predecessor/successor relationships. The activities are then executed. If any of the activities result in the associated success criteria not being met, the team must decide whether to re-test, re-design, or go through the waiver or deviation process. Once all verification and validation activities are completed successfully or granted a waiver/deviation, final summary verification matrices can be generated and reviewed at the final review.
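The sequencing step can be sketched with Python's standard `graphlib` module (Python 3.9 or later), which orders items so that every predecessor comes first. The activity names and the pass/fail results below are hypothetical, and this is only an illustration of the idea, not how GENESYS performs sequencing.

```python
from graphlib import TopologicalSorter

# Hypothetical test activities, each mapped to the activities that must
# be completed before it can start (its predecessors).
predecessors = {
    "structural_analysis": set(),
    "proof_load_test": {"structural_analysis"},
    "system_acceptance_test": {"proof_load_test"},
}

# static_order() yields the activities with all predecessors first.
execution_order = list(TopologicalSorter(predecessors).static_order())
print(execution_order)

# Hypothetical execution results: True means the success criteria were met.
results = {
    "structural_analysis": True,
    "proof_load_test": True,
    "system_acceptance_test": False,
}

# Any activity that missed its success criteria needs a team decision:
# re-test, re-design, or pursue a waiver or deviation.
needs_disposition = [name for name in execution_order if not results[name]]
print(needs_disposition)
```

If the predecessor relationships ever form a loop, `TopologicalSorter` raises `graphlib.CycleError`, which is a useful early signal that the planned sequence cannot be executed as written.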

Modeling Verification Requirements in GENESYS

GENESYS provides a complete verification and validation facility, which includes verification requirement, test activity, test configuration, and verification event classes, along with the associated relationships. These capabilities enable the engineer to capture the full end-to-end verification process described in Figure 1.

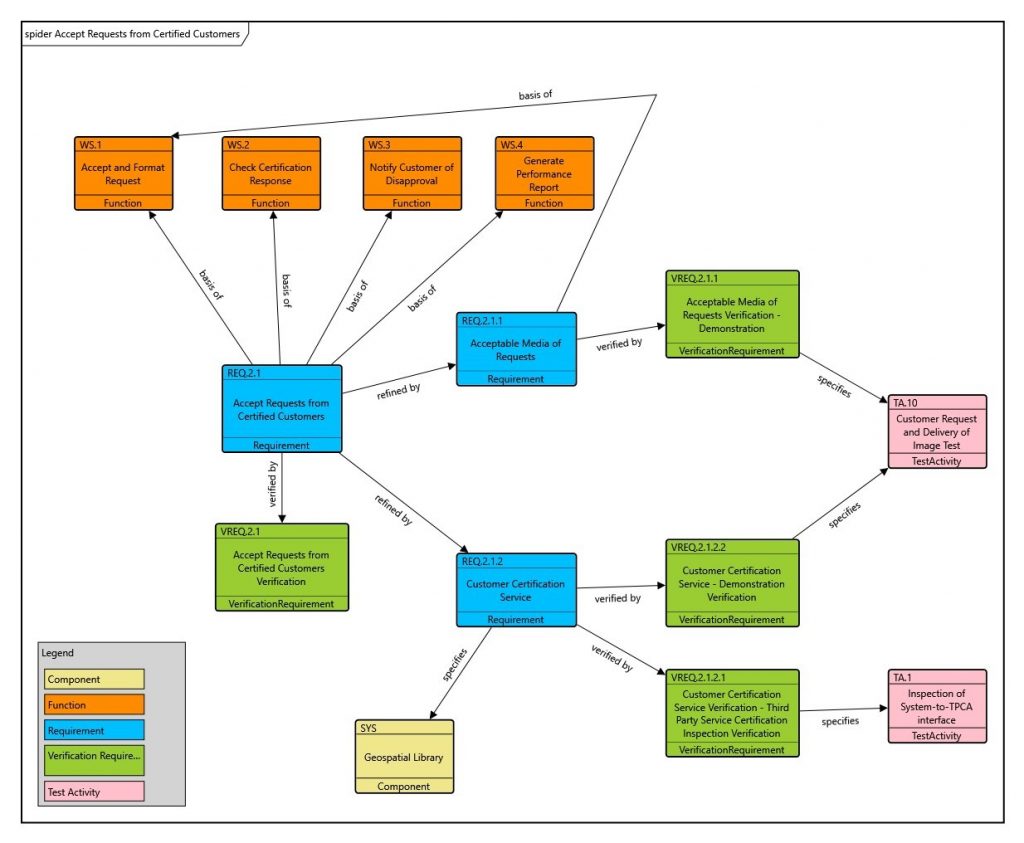

Figure 2 shows a set of verification requirements that have been developed and related to their associated requirements. Test activities and their relationships to verification requirements are also shown.

Figure 2: Spider Diagram Showing the Relationships Between Requirements, Verification Requirements, and Test Activities

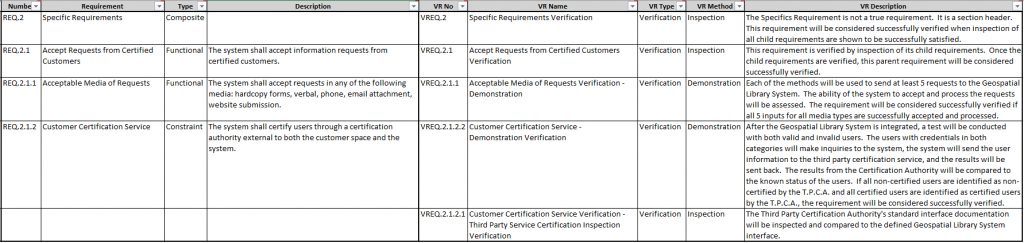

Verification matrices can be generated via the GENESYS Excel Connector. An example is shown in Table 1.

Table 1: Sample Verification Planning Table

The same process can be used for validation. Simply read “validation requirement” in place of “verification requirement”; the same class can be used. The “type” attribute of the verification requirement class defines whether an entry is a verification requirement or a validation requirement. Operational environmental conditions, users/actors interacting with the system, and other external system interactions are all critical elements to account for when developing these requirements and their associated test activities.

Summary

A comprehensive verification and validation process that begins at a program’s inception can significantly increase clarity and communication within the program team, leading to better designs the first time and fewer verification execution issues late in the project’s development phase. This approach can help reduce cost and schedule overruns. GENESYS provides the modeling capabilities to support this process, from verification planning through the tracking of verification results and the development of verification matrices and associated reports.

Is there a good report template and/or a way to integrate this verification matrix to a report? I’d like to be able to export project summary information in a report-like format for the final submission document but I’m finding the reporting tool doesn’t have built-in verification plans. Thanks in advance for any assistance you can provide.

Hi Jessica. Great question. There are some verification templates in GENESYS, but they all need some attention and rework on our side. We’ll be addressing those soon! Thanks for bringing it up!